<center>

# So you want to build a social network?

*Originally published 2024-11-10 by [Nick Sweeting](https://blog.sweeting.me) on [Monadical.com](https://monadical.com/blog).*

---

</center>

> What do prison gangs, US political parties, and Discord servers have in common?

> They all follow the same underlying game theory around trust evolution in groups.

A recent conversation with a friend made me reflect on my 15 years of watching social platforms evolve. Here's what I've discovered about how online communities build trust – or don't.

[TOC]

<center style="opacity: 0.5">(from a random millennial developer's perspective, YMMV)</center>

<br/>

---

<center>

> **Start by playing [The Evolution of Trust](https://ncase.me/trust/) mini-game** (by Nicky Case).

> <a href="https://ncase.me/trust/"><img src="https://docs.monadical.com/uploads/65a24072-fbc8-4ac2-b21d-4c3c5d2f6b74.png"/></a>

> It beautifully explains why trust evolves in groups, why it's game-theoretically optimal & not just an artifact of human psychology, and why it can break down.

</center>

---

<br/>

## High-trust communities expect border security

<!--img src="https://docs.monadical.com/uploads/1f8827c7-1398-4a27-ba0c-c7c047115bc3.png" style="width: 25%; max-width: 159px; float: right; border-radius: 14px; box-shadow: 4px 4px 4px rgba(0,0,0,0.2); filter: invert() contrast(1.52);"/-->

<br/><br/>

> Fear around lax borders in *any* social system arises from a deep, primal, game-theoretic aversion to risk from potential bad actors.<br/>(physical *OR* digital)

<br/>

- it only takes a small number of bad actors (<10%) for people to lose trust in an entire group

- **when they lose trust they're not really losing trust in "the group", they're losing trust in the enforcement of the borders** / the group's ability to keep bad actors out

- **enforcing strong borders allows people to be more vulnerable** in a group and form deeper connections because they have repeat interactions with friendly actors + **less fear of being hurt by a random bad actor**

- **to maintain high trust, the largest groups with the laxest borders require the most moderation labor**, and [regress to tolerating only the most inoffensive content](https://stratechery.com/2024/metas-ai-abundance/#:~:text=the%20smiling%20curve%20and%20infinite%20content) (e.g. r/funny)

- the corollary is that **the newest, smallest groups need the least moderation, and produce the most unique content**. smaller groups don't want/need heavy-handed moderation tooling, and process complexity / decision fatigue deters them from forming

- writing and enforcing border & internal policy at scale is hard. **once a group is too large, people can no longer agree on everything**. a "bad actor" in the eyes of one is a valuable member in the eyes of another

<img src="https://docs.monadical.com/uploads/d1b66b7b-7fd3-4446-9d97-76e40312eea8.png" style="width: 95%; max-width: 350px; float: right; margin-left: 5px">

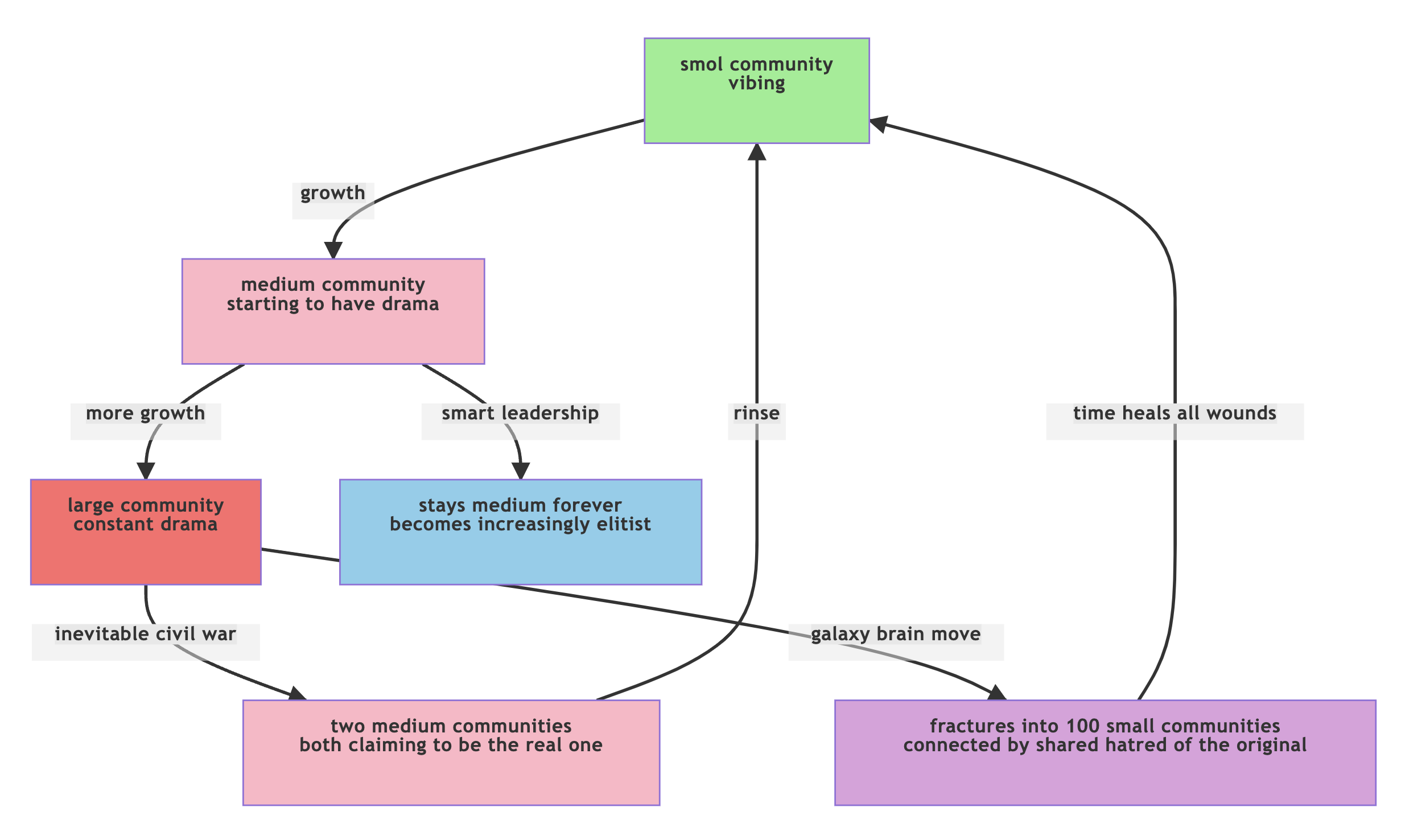

- **larger communities naturally break up and become smaller communities once they hit a critical size**, it's a natural part of all communities of diverse agents, not just humans. Don't try to prevent small groups from growing artificially, and don't become dismayed when big communities regress to the mean. (see [The Geeks, Mops, and Sociopaths](https://meaningness.com/geeks-mops-sociopaths))

- large Discord servers and subreddits often fracture into smaller ones

- people mute noisy public Slack channels and move real discussions to DMs

- DAOs with too many members collapse because democratic consensus is painfully slow
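A toy model makes the grow-then-fracture pattern concrete. This is a sketch under made-up assumptions, not a model of any real platform: every group grows ~10% per round, and any group that passes a hypothetical `critical_size` (150 here, loosely borrowed from Dunbar's number) splits into two uneven factions. `simulate` and all its parameters are illustrative names.

```python
import random
from typing import List


def simulate(rounds: int, critical_size: int = 150, growth: float = 0.1, seed: int = 0) -> List[int]:
    """Toy model: every group grows each round; any group past critical_size splits in two."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    groups = [10]  # start with one small group of 10 members
    for _ in range(rounds):
        next_groups = []
        for size in groups:
            size += max(1, int(size * growth))  # grow ~10% per round, at least 1 member
            if size > critical_size:
                # past the critical size: fracture into two uneven factions
                left = rng.randint(size // 3, size // 2)
                next_groups.extend([left, size - left])
            else:
                next_groups.append(size)
        groups = next_groups
    return groups


print(simulate(50))  # a list of several group sizes, none above critical_size
```

Starting from a single 10-person group, 50 rounds end up as multiple groups, none larger than `critical_size`. Tweaking `growth` or `critical_size` changes how fast the community fragments, not whether it does.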

<style>
.social-networks-blog-evolution-container {
  display: flex;
  gap: 20px;
  padding: 20px;
  flex-wrap: wrap;
}
.social-networks-blog-evolution-image-container,
.social-networks-blog-evolution-iframe-container {
  flex: 1;
  min-width: 300px;
}
.social-networks-blog-evolution-image-container img {
  width: 100%;
  height: auto;
  display: block;
}
.social-networks-blog-evolution-iframe-container iframe {
  width: 100%;
  height: 100%;
  min-height: 400px;
  border: none;
}
@media (max-width: 768px) {
  .social-networks-blog-evolution-container {
    flex-direction: column;
  }
}
</style>

<div class="social-networks-blog-evolution-container">

<div class="social-networks-blog-evolution-image-container">

<img src="https://docs.monadical.com/uploads/8c175444-e2f1-487e-9702-3d6106ad3bb6.png" alt="social network evolution">

</div>

<div class="social-networks-blog-evolution-iframe-container">

<iframe src="https://sweeting.me/artifacts/group-social-dynamics.html" title="Second content"></iframe>

</div>

</div>

<div style="display: none">


<div style="width: 100%; max-width: 600px; height:570px;">

<div>mermaid

graph TD

subgraph "The Geeks"

A[artists/makers] -->|create| B[original content]

C[insight havers] -->|create| D[novel ideas]

end

subgraph "The Mops"

E[reposters] -->|extract| F[reputation]

G[commenters] -->|extract| H[attention]

end

subgraph "The Sociopaths"

I[engagement farming] -->|destroys| J[signal-to-noise]

K[grifting/trolling] -->|destroys| L[community trust]

end

B --> E

D --> G

F --> I

H --> K

style A fill:#90EE90

style B fill:#90EE90

style C fill:#90EE90

style D fill:#90EE90

style E fill:#FFB6C6

style F fill:#FFB6C6

style G fill:#FFB6C6

style H fill:#FFB6C6

style I fill:#FF6B6B

style J fill:#FF6B6B

style K fill:#FF6B6B

style L fill:#FF6B6B

</div>

</div>

</div>

<br/>

- **people that never rebuke bad actors are net negative for most groups** / too many people trying to practice "0% interest altruism" or hyper-tolerance erode net trust slowly

<img src="https://docs.monadical.com/uploads/85410551-29a3-4ca4-8545-7cf6e62089ac.png" style="float: right; width: 155px; border-radius: 14px; margin-top: 10px; margin-left: 4px"/>

- they allow existing bad actors to thrive

- they're a big magnet drawing new, "opportunistic" bad actors to the group

- they undo the benefits of border enforcement & effective moderation by giving airtime + validation to the bad actors they engage with in group contexts

- leaky borders lower net safety for the *most vulnerable members first*

- **the most vulnerable / most fear-prone members will flee early**, and at first they will flee quietly to avoid being followed, eventually leaving only abusers + hyper-tolerants

- **this is a hard pill to swallow** for high-resilience + high-openness (+ high-privilege) people who don't personally experience the downsides of the Be Nice To Everyone and Forgive Everything™️ approach (myself included, this was rough to learn)
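The hyper-tolerance failure mode can be reproduced with a tiny iterated trust game in the spirit of Nicky Case's mini-game. A minimal sketch, assuming payoffs modeled on our reading of its coin machine (both cooperate: +2 each; cheat vs. cooperate: +3 / -1; both cheat: 0) and 10 rounds per match. Swapping the always-cooperating "forgive everything" members for copycats (tit-for-tat, which rebukes a cheat by cheating back) flips who comes out ahead.

```python
from itertools import combinations

# Assumed payoffs: (my_move, their_move) -> (my_points, their_points).
# "C" = cooperate, "D" = cheat.
PAYOFF = {
    ("C", "C"): (2, 2),
    ("C", "D"): (-1, 3),
    ("D", "C"): (3, -1),
    ("D", "D"): (0, 0),
}


def always_cooperate(their_history):
    return "C"  # forgives everything, never rebukes


def always_cheat(their_history):
    return "D"  # the bad actor


def copycat(their_history):
    # tit-for-tat: start nice, then mirror the opponent's previous move
    return their_history[-1] if their_history else "C"


def play_match(strat_a, strat_b, rounds=10):
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a = strat_a(moves_b)  # each player only sees the other's past moves
        b = strat_b(moves_a)
        pa, pb = PAYOFF[(a, b)]
        score_a, score_b = score_a + pa, score_b + pb
        moves_a.append(a)
        moves_b.append(b)
    return score_a, score_b


def tournament(population, rounds=10):
    """Round-robin over a list of strategy functions; returns average score per strategy name."""
    totals, counts = {}, {}
    for strat in population:
        counts[strat.__name__] = counts.get(strat.__name__, 0) + 1
        totals.setdefault(strat.__name__, 0)
    for strat_a, strat_b in combinations(population, 2):
        sa, sb = play_match(strat_a, strat_b, rounds)
        totals[strat_a.__name__] += sa
        totals[strat_b.__name__] += sb
    return {name: totals[name] / counts[name] for name in totals}


tolerant = [always_cheat] + [always_cooperate] * 4
rebuking = [always_cheat] + [copycat] * 4
print(tournament(tolerant))  # {'always_cheat': 120.0, 'always_cooperate': 50.0}
print(tournament(rebuking))  # {'always_cheat': 12.0, 'copycat': 59.0}
```

With four never-rebuking members, the lone cheater out-earns everyone; with four copycats, cheating stops paying after the first round. Real communities add noise, mistakes, and uncertain repeat interactions that this sketch ignores.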

<br/>

- those that leave early sometimes go on to regroup around their shared fear of the "BAD" they fled, forming a new group defined only by their intolerance of the first!

<center><img src="https://docs.monadical.com/uploads/227f7169-e517-447b-86a7-1e35d2681dcf.png" style="text-align: center; width: 90%; max-width: 350px; margin-left: 4px"/></center>

<br/>

- *perceived strictness* towards bad actors / around borders is often more important than actually doling out harsh punishment 100% of the time. if people *feel safe and protected* by peers & platform, they won't try to micromanage border & moderation policy as much

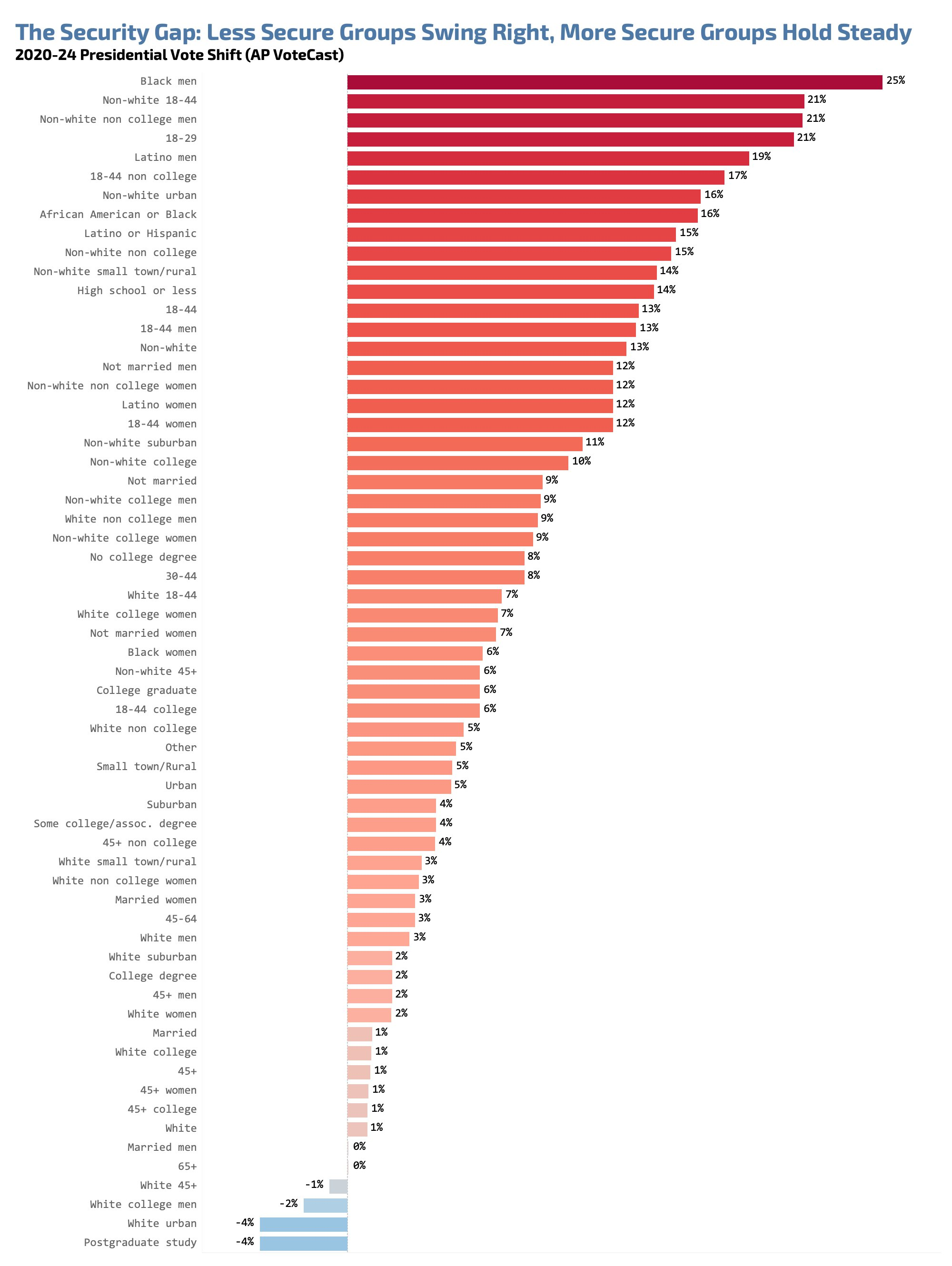

- political parties that don't make a believable *show* of being "tough on borders" / "tough on crime" end up inadvertently breeding authoritarians that stoke fear + promise safety.

<center><img src="https://docs.monadical.com/uploads/38fe4350-1245-4d3b-82ea-5437fe77b47c.png" style="width: 95%; max-width: 390px; border-radius: 14px;"/></center>

- "bad actor" is subjective, defined by the group. the reality of large groups of humans is that there is no objective morality / "right" and "wrong" that everyone can agree on. what some people view as heinous/despicable behavior is a cultural norm for others, which brings me to the next point...

<br/>

---

<div style="display: none">

```mermaid

graph TD

A[smol community<br/>vibing] -->|growth| B[medium community<br/>starting to have drama]

B -->|more growth| C[large community<br/>constant drama]

C -->|inevitable civil war| D[two medium communities<br/>both claiming to be the real one]

D -->|rinse| A

B -->|smart leadership| E[stays medium forever<br/>becomes increasingly elitist]

C -->|galaxy brain move| F[fractures into 100 small communities<br/>connected by shared hatred of the original]

F -->|time heals all wounds| A

style A fill:#90EE90

style B fill:#FFB6C6

style C fill:#FF6B6B

style D fill:#FFB6C6

style E fill:#87CEEB

style F fill:#DDA0DD

```

</div>

> This growth and split pattern shows up EVERYWHERE - from religions splitting into denominations, to programming language communities, to political movements, to literally any group of humans (any group of agents?) trying to coordinate at scale

---

<br/>

## Diversity is important, and conflict is part of diversity

- **diversity is the saving grace of humanity**, it's how we survive viruses (medical *and social*)

- there must be a **mechanism for people to feel safe disagreeing** (e.g. **privacy**), usually by providing ways to restrict audience/engagement to specific groups/spaces

- **minority opinions cannot sprout when there is no escape from a loud majority**. if a platform feels oppressively homogenized or censored, it won't attract diversity of thought

- there must be a way for people to **defend the boundaries of their spaces**, enforce the rules they decide on within, and evict repeat violators

- **freedom of movement is crucial**, people must be allowed to vote with their feet so that good spaces grow and unhealthy spaces fizzle out naturally. don't force people to stay in past groups / restrict joining new ones. Nicky Case has [another great game about this <img src="https://docs.monadical.com/uploads/44f87d9b-34d0-43ba-a77e-69f030f5a364.png" style="height: 38px"/>](https://ncase.me/polygons/)

- **["violence"](#Glossary) (coercion through force) is an inevitable feature of diversity**, not a bug

- when groups disagree over allocation of finite resources (land, attention, votes, $, etc.), someone will eventually try force instead of consensus

- this isn't because people are "evil", it's because consensus is *expensive* (it takes time, it kneecaps big ambitions, and it slows political momentum)

- platforms can **discourage violence** by:

- making consensus paths *cheaper* (clear rules, arbitration help, voting mechanisms)

- making violence *costlier* (platform-wide bans, legal action, collective punishment)

- fostering HIGH TRUST within groups (safe borders = less paranoid groups)

- but **they shouldn't try to eliminate conflict entirely**

- platform-level intervention often causes more harm than [letting conflicts play out](https://arstechnica.com/gadgets/2025/01/reddit-wont-interfere-with-users-revolting-against-x-with-subreddit-bans/)

- over-moderation degrades perception of platform neutrality / group autonomy, eventually driving people to less-moderated / "more private" platforms

- some level of conflict between diverse groups is natural and healthy

<br/>

**Watch out...**

- **perfectly secure groups = perfectly isolated echo chambers**

- insular communities (from superleftists to neo-Nazis) can thrive in isolation, but they stop being challenged by dissenting views. they end up fermenting in their reality distortion fields until they start spewing members in [CRUSADER MODE](https://en.wikipedia.org/wiki/Vote_brigading) who blindly attack others

- platforms need some shared public COMMONS where different people are forced to interact and see that the "OTHER"s they fear are still human too (like a city subway)

- our capacity to relate to others builds from our repository of observed feelings

- when we have no exposure to other people's realities beyond our own safe bubbles, our empathy muscles go unstretched and can start to atrophy

- groups with high trust in their moderation *can* be more resilient to fascist rhetoric, but it's not perfect... you also have to independently deal with the phenomenon of ~~[people tending to get more conservative & risk-averse as they get older/richer/less exposed to diversity](https://www.quora.com/Why-do-many-wealthy-and-influential-individuals-tend-to-hold-conservative-beliefs-Are-there-any-notable-exceptions-to-this-trend)~~ Edit: this may be just a [cohort effect among specific generations](https://scholar.google.com/citations?view_op=view_citation&hl=en&user=4ZrsaM4AAAAJ&citation_for_view=4ZrsaM4AAAAJ:tzM49s52ZIMC), but cohort effects are real too!

- groups can be high-trust within their own borders, but maintain an aggressive strategy of distrust in their interactions with *other groups*. this can be an effective strategy to fiercely protect these pockets of high-trust, but it can lead to [McCarthyism](https://en.wikipedia.org/wiki/McCarthyism) and genocide at scale

<br/>

<br/>

<div style="display:none">mermaid

graph TD

D[Freedom of Speech] -->|attracts| A[Diversity]

A -->|creates| B[Conflict]

B -->|requires| C[Moderation]

C -->|limits| D

style A fill:#90EE90

style B fill:#FFB6C6

style C fill:#FF6B6B

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/136f811b-07c7-49c3-8401-1778c8646caa.png" alt="freedom of speech and moderation" style="max-width: 100%; max-height: 500px;">

</div>

---

<br/>

## Moderation requires *human* sacrifice

- moderation involves 3 responsibilities:

- **labor** (actually sorting through content / abuse reports and making decisions)

- **governance** (writing+evolving policies through consensus processes *or dictatorship*)

- **accountability** (being held responsible when things go wrong)

- **moderation has to be compensated** or no one will do it. but... it can be paid via:

- **money**, OR...

- **clout** / social adoration (e.g. soldiers, reddit mods, etc. are underpaid $ but revered)

> "anytime you see a category of people being revered by society, it means they're underpaid financially. e.g. we were told to thank and respect emergency workers during COVID (because they were underpaid), we're told to thank and respect soldiers, teachers, etc." the same is true for unpaid moderators / elected leaders

- <span style="font-weight: 600; font-size: 0.98em">distributed moderation</span> where everyone is a tiny bit responsible for everything doesn't work

- **no one to blame** when things go wrong; having someone to blame matters because otherwise people blame the entire group/platform and you get a net low-trust community as a result

- **bystander effect**, no one steps up to make hard decisions when they're needed

- lack of **confidentiality** to process sensitive moderation tasks (e.g. removing CSAM or revenge porn: tasks where the whole group doesn't need to know all the details)

- lack of **consistency**/high-level vision means people lose trust in the group because they can't predict whether mod outcomes will be in their favor / "safe" in the future

- governance via direct democratic voting on *all* policy changes usually fails similarly

<br/><center><img src="https://docs.monadical.com/uploads/d4aaaf8e-cd30-476e-b9fe-654c57a6b438.png" style="width: 95%; border-radius: 10%; max-width: 320px; opacity: 0.79"/></center><br/>

- **moderators need to be incentivized to *continue*** acting in their group's best interests

- this is commonly known as the ["Principal-Agent Problem"](https://en.wikipedia.org/wiki/Principal%E2%80%93agent_problem)

- power corrupts & petty power corrupts absolutely. some mods grow bitter & power-trip

- transparency helps, but if there's too much transparency everyone will have an opinion and the mods may get burnt out even sooner ("too many cooks")

- should platform-level mechanisms exist for the group to evict bad moderators / *move* their content to a new space?

- if moderation is assessed by metrics (either for rewarding labor or steering the algorithm), how do you prevent bad actors or AI agents from focusing their effort on gaming the metrics ([Goodhart's Law](https://en.wikipedia.org/wiki/Goodhart%27s_law?))? Also how do you avoid over-engineering systems to prevent metric gaming? Too much "distrust by default" will scare good actors away.

<div style="display: none">mermaid

graph TD

subgraph "mod archetypes"

S[Founding Member] -->|runs on| T[Paternal Instinct]

M[Passionate Newbie] -->|burns out in| N[3-6 months]

Q[Hired Help] -->|needs| R[Actual Salary]

O[Power Tripper] -->|stays for| P[Attention/Control]

end

style M fill:#90EE90

style N fill:#FFB6C6

style O fill:#FF6B6B

style P fill:#FF6B6B

style Q fill:#90EE90

style R fill:#FF6B6B

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/0d63d633-7351-4c7b-9ee9-86738602376e.png" alt="mod archetypes" style="max-width: 100%; max-height: 300px;">

</div>

- distributed filesystems (BitTorrent, IPFS, Filecoin) are an **example of what happens in public forums with no moderation**

- it's VERY hard to remove something from filecoin/bittorrent/etc. because there's no one person you can ask, you have to go through a list of thousands of seeders OR publish a PUBLIC "deletion request" that announces to the world that you would "very much like if everyone would please kindly delete XYZ content, and **please don't look at it too closely before deleting it**"

- as a result most individuals and corporations are unwilling to use public distributed storage because they fear losing control of their data / it leaking out into a low-trust public sphere where **they might be hurt by some bad actor**.

- people would rather share their photos with their facebook friends / twitter followers / any moderated space where at least they have some **control over who sees it + the ability to delete** it themselves OR request a trusted moderator to delete it for them

- **0-moderation platforms become a magnet for all the bad actors** and worst types of content, as they learn there is no punishment here. the most impressionable users on these platforms start behaving poorly as they become desensitized to unrebuked bad behavior, and the [Overton Window](https://en.wikipedia.org/wiki/Overton_window) of acceptable behavior starts to shift platform-wide.

- **technical solutions cannot replace human moderators**, but they can augment them

> A COMPUTER CAN NEVER BE HELD ACCOUNTABLE, THEREFORE A COMPUTER MUST NEVER MAKE A MANAGEMENT DECISION [-- *1979 quote from IBM*](https://x.com/bumblebike/status/832394003492564993/photo/1)

- moderators can **use AI tools to speed up the review/sorting** of content

- but groups still **need to hold someone accountable**/know who to talk to **when the AI tools make incorrect judgements**, and *[someone needs to decide which models to use](https://monadical.com/posts/Trustless-is-A-Myth.html)*

- **community libraries of AI classifier models** could be developed, shared, and ranked, allowing groups (or individual users) to pick and choose their automated policies locally
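One way to wire AI tools in as *assistants* rather than judges: groups opt into models from a shared library, the models only sort content into a human review queue, and every flag records which model raised it and which named moderator owns the decision. A minimal sketch; the keyword and caps-lock "classifiers", `LIBRARY`, `GroupPolicy`, `Flag`, and `triage` are all hypothetical stand-ins for real shared models and tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Classifier = Callable[[str], float]  # returns a score in [0, 1]


# Hypothetical stand-ins for community-shared models; a real library would hold ML models.
def spam_keywords(text: str) -> float:
    return 1.0 if "free btc" in text.lower() else 0.0


def shouting(text: str) -> float:
    letters = [c for c in text if c.isalpha()]
    if not letters:
        return 0.0
    return sum(c.isupper() for c in letters) / len(letters)


LIBRARY: Dict[str, Classifier] = {"spam_keywords": spam_keywords, "shouting": shouting}


@dataclass
class GroupPolicy:
    models: List[str]  # which shared models this group opted into
    threshold: float = 0.8
    accountable_mod: str = "unassigned"  # a named human who owns the final decision


@dataclass
class Flag:
    text: str
    model: str  # which model raised the flag, for accountability
    score: float
    reviewer: str


def triage(posts: List[str], policy: GroupPolicy) -> List[Flag]:
    """Route suspicious posts to a human review queue; nothing is removed automatically."""
    queue = []
    for text in posts:
        for name in policy.models:
            score = LIBRARY[name](text)
            if score >= policy.threshold:
                queue.append(Flag(text, name, score, policy.accountable_mod))
                break  # one flag is enough to put the post in front of a human
    # highest scores first so mods see the likeliest problems sooner (stable for ties)
    return sorted(queue, key=lambda f: f.score, reverse=True)


posts = ["hello friends", "Elon is giving out FREE BTC", "WHY IS NOBODY LISTENING"]
policy = GroupPolicy(models=["spam_keywords", "shouting"], accountable_mod="alice")
for flag in triage(posts, policy):
    print(flag.model, flag.score, flag.reviewer, flag.text)
```

Because each group picks its own `models` and `threshold`, two groups can run different automated policies over the same content, while a named human still makes the call.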

<br/>

---

<div style="display: none">mermaid

graph TD

A[authentic content] -->|gets attention| B[attracts optimizers]

B -->|they build optimization tools| C[optimization becomes easy]

C -->|forces everyone to optimize| D[all signals compromised]

D -->|people seek authenticity| E[new platform appears]

E -->|cycle repeats| A

style A fill:#90EE90

style B fill:#FFB6C6

style C fill:#FF6B6B

style D fill:#FF6B6B

style E fill:#90EE90

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/06bc8cd6-f489-4cde-9df8-09c9ca5d228d.png" alt="authentic content cycle" style="max-width: 100%; max-height: 600px;">

</div>

---

<br/>

## Reputation is useless if new identities are free

- When groups exceed [Dunbar's Number](https://en.wikipedia.org/wiki/Dunbar%27s_number) (~200), **users need help knowing who to trust**

- Platforms desiring high-trust need to **provide systems to track reputation** (e.g. Uber ⭐️ ratings, Reddit karma, Twitter's # of followers, ☑️ checkmarks, crypto wallet balances, etc.)

- some platforms also provide **reputation/score of individual pieces of content**, usually separate from the reputation of the author, though the two can also be linked (e.g. reddit karma)

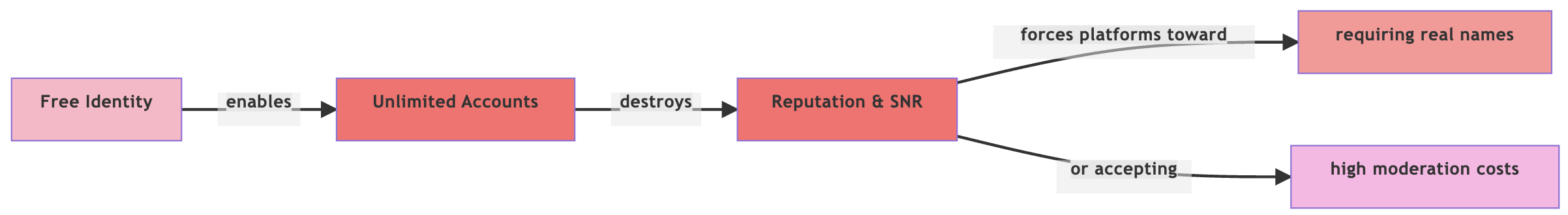

- Peer-nomination/**voting-based reputation is worthless if *creating a new identity* is free**

- how do you know if a user/post with 1,000 points got 1 vote each from 1,000 real people, or from 1,000 fake bot accounts?

- how do you know if the 200 comments arguing under some post are separate people, or one person with a bot farm and an agenda to push?

- how do you prevent 51% [Sybil attacks](https://en.wikipedia.org/wiki/Sybil_attack) when voting on governance/moderation issues?

- **You can delegate the management of identity** creation/rate-limiting/revoking to others

<img src="https://docs.monadical.com/uploads/2a6b6b75-580b-49b8-b757-8f5faaf179ad.png" style="width: 90%; border-radius: 15px; max-width: 190px; margin-right: 20px; margin-left: 10px; margin-top: 25px; float: right"/>

- use gmail account to sign up -> delegating identity management to Google

- use phone number to sign up -> delegating identity to cellular provider

- provide driver's license to sign up -> delegating identity management to the state

- link crypto wallet / credit card to sign up -> delegating identity to blockchain / banks

- ... you punt a lot of abuse/hacking risk to them, but you also give up *a lot* of control

- The identity provider has to put up **some barrier to make identity creation costly**, e.g.

- time (e.g. fill out this form to sign up, wait XYZ time for account to activate, etc.)

- effort (e.g. solve these CAPTCHAs, mail in this document, wait in line at the DMV, etc.)

- cost (pay some $$$ to get an account, pay $10 to get a "validated" checkmark, etc.)

- biometrics / documents (e.g. provide your fingerprint, DNA, face scan, birth certificate, etc.)

- social (e.g. you must get at least 2 referrals from other users, enter an invite code, etc.)

<center><a href="https://transmitsecurity.com/blog/recommendations-for-mitigating-risk-at-each-stage-of-the-machine-identity-lifecycle"><img src="https://docs.monadical.com/uploads/d1b1c820-d709-4c5c-b26e-00733c5dcad0.png" style="max-width: 690px; width: 95%"/><small style="font-size: 0.5em">transmitsecurity.com/blog/recommendations-for-mitigating-risk-at-each-stage-of-the-machine-identity-lifecycle</small></a></center>

- Your platform can allow **full anonymity, no anonymity, or something in between**:

- `full anon`: no stable username, no reputation, no verified accts. (4chan, BitTorrent)

- **`pseudonymous`: stable username w/ reputation, but no real-name policy** (X, Reddit)

- `partial`: the platform knows who you are IRL, other users do not (Blind, Glassdoor)

- `real-names only`: platform-enforced 1 real person = 1 acct. (FB, LinkedIn, work Slack)

- Each approach to anonymity and identity has tradeoffs, choose carefully...

- **anon users behave worse** ([Online Disinhibition Effect](https://en.wikipedia.org/wiki/Online_disinhibition_effect))

- **but real-name policies kill dissent**, edgy ideas, & creativity ([LinkedIn, FB, Quora](https://www.internetmarketingninjas.com/blog/social-media/real-name-policies-on-social-media/))

- **you can't easily ban or limit bad actors if accounts are anonymous & free** (Email)

- but you can't attract artists / whistleblowers / dissenters if real names are required

- if you claim to provide real name verification, you have to wage an endless war against impersonation attacks (`Visa Support` Caller-ID, `Elon is giving out free ₿tc`).

- if you allow verifying by ID OR *money*, then verification loses its value (see X.com ☑️s)

- if you don't offer *any* brand verification, celebrities and corporations may not want to use your platform as they cannot differentiate themselves from a sea of impostors

- knowing real identities makes you a target for hackers looking to steal them

- **Most public platforms settle on pseudonymous identity** (e.g. stable identity that has a small cost to create + can be tracked with reputation, w/ opt-in real name verification)

- Any **failure to consistently enforce policies** around identity and reputation **will lead to users losing trust in peers, moderation systems, and eventually the entire platform**
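Why free identities break vote counts, in code: a naive tally counts accounts, while a tally keyed on a delegated identity (phone number, verified email, etc.) counts each underlying identity once and ignores unverified accounts. A minimal sketch; `Account`, `identity_key`, and the scoring functions are hypothetical, and real systems need fuzzier signals than one clean key per person.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Account:
    username: str
    # hypothetical: stable ID from a delegated identity provider
    # (phone number, verified email, ...); None if the account never verified
    identity_key: Optional[str] = None


def naive_score(upvoters: List[Account]) -> int:
    # one point per account: inflatable for free if accounts are free
    return len(upvoters)


def identity_score(upvoters: List[Account]) -> int:
    # one point per verified identity, however many accounts it controls
    return len({a.identity_key for a in upvoters if a.identity_key is not None})


def affordable_sybils(attacker_budget: float, cost_per_identity: float) -> float:
    # how many fresh identities an attacker can buy; unbounded when creation is free
    if cost_per_identity <= 0:
        return float("inf")
    return int(attacker_budget // cost_per_identity)


real = [Account("ana", "+1-555-0101"), Account("bo", "+1-555-0102"), Account("cy", "+1-555-0103")]
# one person's bot farm: 1,000 accounts, half unverified, half sharing a single phone number
bots = [Account(f"bot{i}", "+1-555-0199" if i % 2 else None) for i in range(1000)]

print(naive_score(real + bots))     # 1003
print(identity_score(real + bots))  # 4
print(affordable_sybils(100, 0))    # inf
print(affordable_sybils(100, 10))   # 10
```

The bot farm moves the naive count by 1,000 but the identity-keyed count by 1. The attacker's remaining lever is buying more identities, which is exactly what the creation barriers above are meant to price.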

<br/>

<div style="display: none">mermaid

graph LR

A[Free Identity] -->|enables| B[Unlimited Accounts]

B -->|destroys| C[Reputation & SNR]

C -->|forces platforms toward| D[requiring real names]

C -->|or accepting| E[high moderation costs]

style A fill:#FFB6C6

style B fill:#FF6B6B

style C fill:#FF6B6B

style D fill:#FF9696

style E fill:#FFB6e6

</div>

<br/>

<img src="https://docs.monadical.com/uploads/04b0a313-4144-49d0-a945-1e46f25cc326.png" style="width: 99%; opacity: 0.35;"/><br/>

<br/>

## Should users share-by-Copy or share-by-Reference?

- you have to **decide if users will share content by sending a *COPY*, or a *REFERENCE***

<!--center><img src="https://docs.monadical.com/uploads/7b5e4abe-179f-4ed6-9de4-7c2c9e47e304.png" style="width: 80%; max-width: 300px"/></center-->

- **share-by-COPY** -- e.g. Email, SMS, WhatsApp, iMessage, Signal, Mastodon, BitTorrent

<img src="https://docs.monadical.com/uploads/37192162-dc99-41d6-8210-d46c2d08ff95.png" style="width: 80%; max-width: 130px; float: right; margin-top: 25px; margin-left: 30px; margin-right: 60px; opacity: 0.2"/>

- best when anonymous or only sharing w/ specific users (email "To:"/"CC:" field / select ____ users to send to)

- **pros:** censorship-resistant, resilient, full content survives if even one reader has a copy, no draconian API limits

- **cons:** hard to monetize, impossible to delete mistakes/revenge-porn/CSAM once public

<br/>

- **share-by-REFERENCE** -- e.g. Facebook, TikTok, Insta, X, Discord, Slack, Google Docs

<img src="https://docs.monadical.com/uploads/6639b21f-6444-4f6d-a5b5-dbf82f87ac8c.png" style="width: 80%; max-width: 140px; float: right; margin-top: 26px; margin-right: 60px"/>

- best for large, public, noisy forums, <br/>*risky* for very small or oppressed communities

- **pros:** easier moderation, artists keep ownership of their own creations, corporations & creators more willing to publish original content if they can create exclusivity, limit audiences, block trolls, **monetize** wo/ piracy, etc.

- **cons:** content [dies easily](https://news.ycombinator.com/item?id=39476074) from account bans, sites going down, state censorship

<br/>

- obviously in all systems people can choose to *manually share a link/reference* or to *manually copy* content (e.g. by taking a screenshot, copy+pasting, or forwarding)

- but the DEFAULT sharing mechanism shapes the whole platform's future
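The difference is easy to see in a data-structure sketch: a copy puts the content itself in the reader's inbox, while a reference only stores an ID that has to be resolved against whoever hosts the original. A minimal model with hypothetical `Host` and `Inbox` classes (no real protocol implied): once the post is deleted, whether by the author, a mod, a ban, or a takedown, the reference dangles but the copy survives.

```python
from typing import Dict, List, Optional


class Host:
    """Hypothetical central server for share-by-REFERENCE content."""

    def __init__(self) -> None:
        self.posts: Dict[str, str] = {}

    def publish(self, post_id: str, content: str) -> None:
        self.posts[post_id] = content

    def delete(self, post_id: str) -> None:
        # same code path for an author's mistake, a mod removal, a ban, or a takedown order
        self.posts.pop(post_id, None)

    def resolve(self, post_id: str) -> Optional[str]:
        return self.posts.get(post_id)


class Inbox:
    def __init__(self) -> None:
        self.copies: List[str] = []  # share-by-COPY: the reader holds the content itself
        self.refs: List[str] = []    # share-by-REFERENCE: the reader holds only an ID

    def read(self, host: Host) -> List[Optional[str]]:
        return self.copies + [host.resolve(ref) for ref in self.refs]


host = Host()
host.publish("post-1", "my cat photo")

inbox = Inbox()
inbox.copies.append("my cat photo")  # forwarded as a copy (like email)
inbox.refs.append("post-1")          # shared as a link (like a feed)

print(inbox.read(host))  # ['my cat photo', 'my cat photo']
host.delete("post-1")
print(inbox.read(host))  # ['my cat photo', None]
```

That single `delete` path is the whole trade-off in miniature: it is what makes moderation (and removing revenge porn) possible, and it is also what makes bans, outages, and state censorship fatal to the content.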

<br/>

<div style="display: none">mermaid

graph TD

subgraph "share by reference"

A[Creator Control] -->|enables| B[Paid Content]

B -->|but risks| C[Content Death]

end

subgraph "share by copy"

D[Content Survival] -->|enables| E[Censorship Resistance]

E -->|but risks| F[Harmful Spread]

end

style A fill:#90EE90

style B fill:#90EE90

style C fill:#FF6B6B

style D fill:#90EE90

style E fill:#90EE90

style F fill:#FF6B6B

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/73feba2e-f35a-40af-b827-f5623479d97b.png" alt="share by copy" style="max-width: 100%; max-height: 400px;">

</div>

---

<br/>

## There is no such thing as 0 platform bias / 100% transparency

- **state-level compliance & court rulings will force you to behave inconsistently**

- pick your poison: **comply with CCP censorship** / EU data laws / US subpoenas / DMCA / etc. **OR be fugitive operators & users within those countries**

- biased enforcement or partial compliance = loss in platform trust or censorship by state

- gag orders & privacy laws will prevent you from being fully transparent with the public

<br/>

- **100% diversity / free-speech absolutism with no platform bias is a [Faustian promise](https://en.wikipedia.org/wiki/Deal_with_the_Devil)**

- **"we're neutral/free-speech absolutist" backfires [when enforced inconsistently](https://arstechnica.com/security/2024/07/cloudflare-once-again-comes-under-pressure-for-enabling-abusive-sites/)**

- don't carve platform policies in stone lest ye be entombed by them in 5yr; public opinion, business needs, and the [whims of the](https://apnews.com/article/x-musk-blocking-change-harassment-2f31853749daeb86db70db53f8b1f62f) [CEO change](https://about.fb.com/news/2025/01/meta-more-speech-fewer-mistakes/)

<center><a href="https://www.hollandsentinel.com/story/opinion/editorials/2017/08/20/with-freedom-speech-comes-great/19409095007/"><img src="https://docs.monadical.com/uploads/37ccdf7d-a933-4b40-ba66-4afe100bd482.png" style="width: 90%; max-width: 560px;margin: 5px"/><br/><small style="font-size: 0.5em">hollandsentinel.com/story/opinion/editorials/2017/08/20/with-freedom-speech-comes-great/19409095007/<br/><br/></small></a></center>

- terrorists, fraudsters, hate groups, and bots will try to use your platform. they'll spam, they'll want support. it'll lead to subpoenas & bad PR. do you [help, ignore, or ban](https://en.wikipedia.org/wiki/Controversial_Reddit_communities) them?

- **Web3/distributed apps are not magically immune to the court of public opinion**, critics & prosecutors will [find a **person** to hold accountable](https://www.forbes.com/sites/digital-assets/2024/08/25/telegrams-founder-arrested-what-it-means-for-popular-messaging-app/) when platforms misstep... beyond just CEO, who writes smart contracts? who is hosting? who funds payroll?

<br/>

- **being *too transparent* / *too democratic* can backfire, harm trust, & unravel ambitions**

- some moderation decisions need context that can't be made public (DMs, [PII](https://www.law.cornell.edu/uscode/text/18/2258A#:~:text=information%20relating%20to%20the%20identity%20of%20any%20individual%20who%20appears%20to%20have%20violated), etc.)

- full transparency promises create mistrust when exceptions emerge (e.g. [gag-orders](https://en.wikipedia.org/wiki/Warrant_canary))

- too many cooks / [design-by-committee](https://en.wikipedia.org/wiki/Design_by_committee) / [having to defend all choices in public blocks rapid progress](https://www.law.com/dailybusinessreview/2024/09/17/eleventh-circuit-upholds-limits-on-public-comments-during-city-council-meetings/?slreturn=2024112343342) (giant orgs like Apple love to limit internal process visibility for a reason)

- when leadership feels paralyzed because their every move is watched and criticized, they resort to using communication side channels like [Signal DMs and in-person meetings](https://www.theverge.com/2024/4/26/24141801/ftc-amazon-antitrust-signal-ephemeral-messaging-evidence), eventually [*normalizing* a culture of subverting transparency](https://calmatters.org/politics/2024/09/california-secret-negotiations-public-transparency/)!

- orgs need "secret" channels to internally call out non-compliance before it becomes an issue. fear of leakers airing every bit of internal dirty laundry means that issues simply don't get called out internally. in functioning companies, the path to get issues fixed internally should be *more attractive* than [running to a journalist and whistleblowing](https://x.com/patio11/status/1898020156770525629)!

<div style="display: none">mermaid

graph TD

A[Platform Claims Neutrality] -->|attracts| B[All Types of Users]

B -->|including| C[Problematic Groups]

C -->|forces| D[Take a Stance]

D -->|contradicts| A

style A fill:#90EE90

style B fill:#FFB6C6

style C fill:#FF6B6B

style D fill:#FF6B6B

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/943fd277-63b6-4ee3-8d6e-44e155ba540a.png" alt="platform neutrality" style="max-width: 100%; max-height: 500px;">

</div>

<center><small>Of course there's <a href="https://www.explainxkcd.com/wiki/index.php/1357:_Free_Speech">an XKCD for this too: #1357 Free Speech</a>.</small></center>

<br/>

---

<br/>

## Extra food for thought...

<br/>

#### Social network platforms are like countries, and their subgroups are like states

<div style="display: none">mermaid

graph TD

subgraph " "

A[Content Rules] -->|==| B[Laws]

C[Moderation] -->|==| D[Police/Courts]

E[User Identity] -->|==| F[Citizenship]

G[Group Borders] -->|==| H[Immigration Policy]

end

style A fill:#90EE90

style B fill:#FFB6C6

style C fill:#90EE90

style D fill:#FFB6C6

style E fill:#90EE90

style F fill:#FFB6C6

style G fill:#90EE90

style H fill:#FFB6C6

</div>

<div style="text-align: center;">

<img src="https://docs.monadical.com/uploads/5d03742c-ed3a-40b9-8d0c-400c28df7198.png" alt="social countries" style="max-width: 100%; max-height: 300px;">

</div>

By now you have likely realized that **all of the issues digital groups face are the same issues that countries face**: governance, border enforcement, policing, peaceful dispute resolution vs military action, deciding what freedom of speech is allowed and what is dangerous harassment / libel / disinformation, limiting fake identity creation, preserving history as storage degrades, etc.

*But what are some of the ways that digital platforms are different?*

<br/>

---

<br/>

#### *Most* apps end up becoming social apps eventually

Many different types of platforms inadvertently end up with social network dynamics, whether they set out to be a "social network" or not. Information wants to be free: if your app contains valuable information and people spend time using it, people will figure out a way to share it.

- a finance & accounting tool that lets ppl edit forms and spreadsheets together? **yup.**

- a mailing list for technical discussions about linux kernel development? **yup.**

- a childcare app that lets mothers discuss issues / get community support in-app? **yup.**

- a videogame where kids can chat + share screencaps while mining virtual coal? **definitely.**

- a personal AI tool that lets you query other people's personal AIs? **absolutely.**

Whether you're trying to build something like these ^ or something "completely new that the world has never seen", if your app involves connecting people with other people in any way, you're probably going to see some of these challenges.

<br/>

---

<br/>

#### Should I "care about those nearest to me" or "try to care about everyone"?

<br/>

<center>

<img src="https://docs.monadical.com/uploads/a1e07817-28fc-4d96-95f8-ce55bf973d4d.png" style="width: 90%; max-width: 550px"/><br/>

<small style="font-size: 11px"><a href="https://www.researchgate.net/figure/Heatmaps-indicating-highest-moral-allocation-by-ideology-Study-3a-Source-data-are_fig6_336076674">researchgate.net/figure/Heatmaps-indicating-highest-moral-allocation-by-ideology-Study-3a-Source-data-are_fig6_336076674</a></small>

</center><br/>

People often self-sort into these categories situationally - both approaches can be valid depending on context. When these mindsets collide, predictable trust dynamics emerge:

- **"conservatives" see "liberals" as dangerously naive** for extending trust too broadly

- **"liberals" view "conservatives" as morally stunted** for concentrating care locally

- both sides often metabolize their default bias into *crusades* when poked: conservatives protect their sacred inner rings, liberals expand their circle of moral consideration

- neither grasps how the other's strategy evolved as a valid survival mechanism

- **either strategy taken to its extreme is a recipe for disaster**; social evolution gave us both for a reason. the tension between them forces us to find a balance as different subgroups within our species oscillate between survival and abundance

When designing trust systems at the platform level, honor both patterns: they're just different paths people follow to protect what they value. And remember that a platform can never know the full context behind any moderation decision.

<br/>

> "Everyone you meet is fighting a battle you know nothing about. Be kind."<br/> -- Ian MacLaren <small style="display: inline">or <a href="https://old.reddit.com/r/QuotesPorn/comments/hyvlol/everyone_you_meet_is_fighting_a_battle_you_know/">Robin Williams?</a></small>

<br/>

---

#### What if a social platform existed that had group size limits & exclusivity as a core dynamic?

- groups could be capped at 255 posting members at a time (w/ more read-only viewers)

- each person could be a *posting member* (or moderator) of only 255 groups at a time

- capping total participants and reach filters content for quality: small groups can more easily self-moderate, remember each other, and hopefully treat each other better

- groups have to scale by spawning new groups; tree-shaped hierarchies of groups may even form

- each group of 255 could have 5 moderation seats, initially held by the first 5 members. mods can step down at any time or be voted out by a supermajority, and each vacated seat simply goes to the next person in line on a continuously updated ranked-choice list that members vote on.

...

- *and now what if each group had its own **cryptocurrency!**, and you could trade all the groups' tokens on a global exchange. the most exclusive groups that are the hardest to get into would be valued the highest, supergroups would be like indexes, you could short groups with options, etc...* /s

jk jk
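Setting the token joke aside, the membership caps and moderation-seat rules above are concrete enough to sketch. What follows is a minimal, hypothetical Python model, not a spec. `Platform`, `Group`, `join`, `vote_out`, and `vacate` are invented names. "Supermajority" is assumed to mean two thirds of posting members. The "ranked-choice list" is approximated with a Borda count, a swapped-in technique chosen because instant-runoff ranked choice elects a single winner rather than producing a full line of succession.

```python
from dataclasses import dataclass, field

MAX_POSTERS = 255          # posting members per group (from the proposal)
MAX_GROUPS_PER_USER = 255  # groups a user can post in (from the proposal)
MOD_SEATS = 5
SUPERMAJORITY = 2 / 3      # assumption: the post doesn't define "supermajority"


@dataclass
class Group:
    posters: list[str] = field(default_factory=list)  # in join order
    viewers: set[str] = field(default_factory=set)    # read-only, uncapped
    mods: list[str] = field(default_factory=list)
    retired: set[str] = field(default_factory=set)    # ex-mods, not auto-refilled
    # hypothetical: voter -> ranked list of preferred mod candidates
    ballots: dict[str, list[str]] = field(default_factory=dict)

    def succession_line(self) -> list[str]:
        """Rank eligible posters by Borda score (a stand-in for "ranked choice")."""
        scores: dict[str, int] = {}
        for voter, ranking in self.ballots.items():
            if voter not in self.posters:
                continue  # only current posting members get a vote
            for place, candidate in enumerate(ranking):
                scores[candidate] = scores.get(candidate, 0) + len(ranking) - place
        eligible = [p for p in self.posters
                    if p not in self.mods and p not in self.retired]
        # sorted() is stable, so ties fall back to join order
        return sorted(eligible, key=lambda p: -scores.get(p, 0))

    def fill_seats(self) -> None:
        """Hand empty mod seats to the next people in the succession line."""
        for candidate in self.succession_line():
            if len(self.mods) >= MOD_SEATS:
                break
            self.mods.append(candidate)

    def vacate(self, mod: str) -> None:
        """Used both for stepping down and for being voted out."""
        self.mods.remove(mod)
        self.retired.add(mod)
        self.fill_seats()


class Platform:
    def __init__(self) -> None:
        self.groups: dict[str, Group] = {}
        self.posting_in: dict[str, set[str]] = {}  # user -> groups they post in

    def join(self, user: str, name: str) -> bool:
        """Join as a poster if both caps allow it, otherwise as a read-only viewer."""
        group = self.groups.setdefault(name, Group())
        mine = self.posting_in.setdefault(user, set())
        if user in group.posters:
            return True
        if len(group.posters) >= MAX_POSTERS or len(mine) >= MAX_GROUPS_PER_USER:
            group.viewers.add(user)
            return False
        group.posters.append(user)
        group.viewers.discard(user)
        mine.add(name)
        group.fill_seats()  # so the first MOD_SEATS posters become the mods
        return True

    def vote_out(self, name: str, mod: str, voters: set[str]) -> bool:
        """Remove a mod if a supermajority of posting members votes for it."""
        group = self.groups[name]
        valid = voters & set(group.posters)  # only posting members count
        if mod not in group.mods or len(valid) < SUPERMAJORITY * len(group.posters):
            return False
        group.vacate(mod)
        return True


if __name__ == "__main__":
    p = Platform()
    for i in range(7):
        p.join(f"u{i}", "rust-gamedev")
    g = p.groups["rust-gamedev"]
    print(g.mods)  # ['u0', 'u1', 'u2', 'u3', 'u4']
    g.ballots["u0"] = ["u6", "u5"]
    p.vote_out("rust-gamedev", "u1", {"u0", "u2", "u3", "u4", "u5"})
    print(g.mods)  # ['u0', 'u2', 'u3', 'u4', 'u6']
```

A mod stepping down would just call `g.vacate("u0")`. Ex-mods are excluded from automatic refill here. How (or whether) they could be re-elected is left open, as it is in the post.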


<br/>

---

#### Glossary

Before you write an angry comment about any of the words I chose for the concepts above, understand these are the working definitions I intended in this context:

- **`platform`**: the social platform, app, or other medium that your users communicate through

- **`group`**: a shared space for users and their content on your platform, using whatever mechanism your platform provides for this (e.g. a subreddit, mailing list, channel, etc.)

- **`moderator`**: someone with the power to decide what content / users are allowed in a group

- **`vulnerability`**: willingness to take risks in communication without fear of personal attack

- **`diversity`**: presence of differences in background & opinion (not just race/gender)

- **`bad actor`**: a person who acts against a group's interests, *from the group's perspective*

- **`"violence"`**: attempts at physical *or digital* coercion through overwhelming force instead of consensus / collaboration processes

- **`"conservatives"`** == `risk averse / prioritize ingroup`

- **`"liberals"`** == `risk tolerant / strive for outgroup = ingroup`

<br/>

---

<br/>

#### Further Reading

- <img src="https://docs.monadical.com/uploads/44f87d9b-34d0-43ba-a77e-69f030f5a364.png" style="height: 38px; margin-bottom: 5px"/> https://ncase.me/trust/ and https://ncase.me/polygons/ A+ mini-games by Nicky Case

- ⭐️ https://meaningness.com/geeks-mops-sociopaths

- ⭐️ https://monadical.com/posts/Trustless-is-A-Myth.html

- ⭐️ https://docs.sweeting.me/s/trust-and-relationships

- ⭐️ https://apenwarr.ca/log/20211201 (100 years of whatever this will be)

- ⭐️ https://www.jofreeman.com/joreen/tyranny.htm

- https://www.browserbase.com/website/blog/separating-trust-from-threat

- https://www.lesswrong.com/posts/tscc3e5eujrsEeFN4/well-kept-gardens-die-by-pacifism

- https://stratechery.com/2024/metas-ai-abundance/#:~:text=the%20smiling%20curve

- https://x.com/itsannpierce/status/1859351663330595185

- https://ourworldindata.org/trust

- https://maxread.substack.com/p/is-web3-bullshit

- https://docs.sweeting.me/s/decentralization

- https://mnot.github.io/avoiding-internet-centralization/draft-nottingham-avoiding-internet-centralization.html#name-the-limits-of-decentralizat

- https://docs.sweeting.me/s/principles-handbook#3-Trust-and-Verify

- https://docs.monadical.com/s/love-and-ambiguity

- https://monadical.com/posts/Downswings-and-the-Information-Game.html

- https://adventurebagging.co.uk/2012/04/altruism-versus-self-interest/

- https://reagle.org/joseph/2010/conflict/media/gibb-defensive-communication.html

- https://alexdanco.com/2021/01/22/the-michael-scott-theory-of-social-class/